Bagging Predictors. Machine Learning

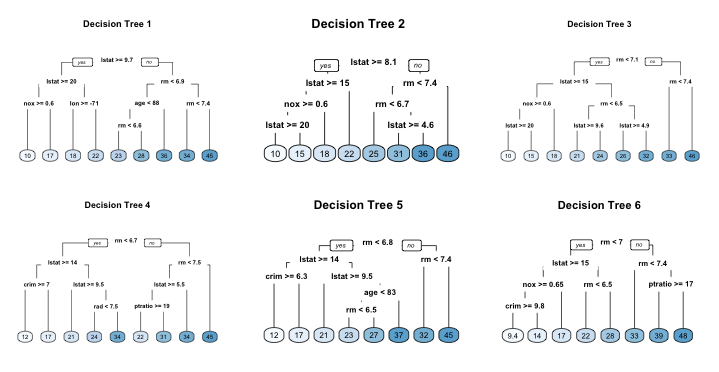

With minor modifications, these algorithms are also known as Random Forest and are widely applied here at STATWORX, in industry, and in academia. In this blog, we will explore the Bagging algorithm and a computationally more efficient variant thereof, Subagging.
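The difference between the two sampling schemes can be sketched in a few lines. This is a minimal illustration, assuming the usual reading of Subagging as "subsample aggregating" (each learner sees a smaller subset drawn without replacement), whereas Bagging draws a full-size sample with replacement. The function names, the toy data, and the half-size subsample are hypothetical choices for the example, not taken from the blog.

```python
import random

def bootstrap_sample(data, rng):
    """Bagging-style draw: len(data) items sampled WITH replacement."""
    return rng.choices(data, k=len(data))

def subagging_sample(data, m, rng):
    """Subagging-style draw: m < len(data) items sampled WITHOUT replacement."""
    return rng.sample(data, m)

rng = random.Random(0)      # fixed seed, used only for repeatability
data = list(range(10))      # hypothetical training-set indices

boot = bootstrap_sample(data, rng)                   # may contain duplicates
sub = subagging_sample(data, len(data) // 2, rng)    # never contains duplicates

print("bootstrap:", boot)
print("subsample:", sub)
```

Because each subsample is smaller than the full training set, every base learner is fitted on less data, which is where the computational saving would come from.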

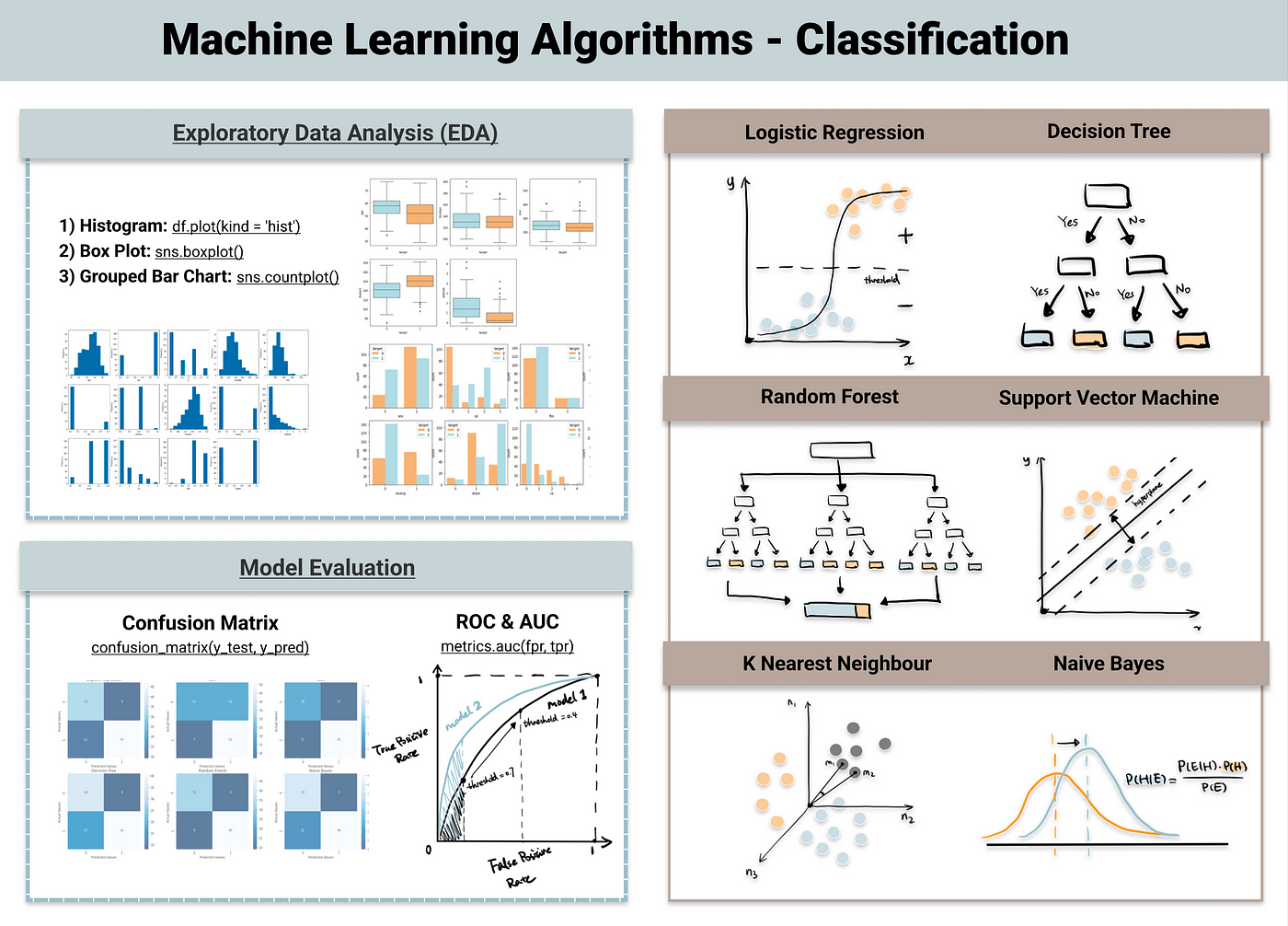

Ensemble Learning Bagging And Boosting In Machine Learning Pianalytix Machine Learning

In the above example, the training set has 7 samples.

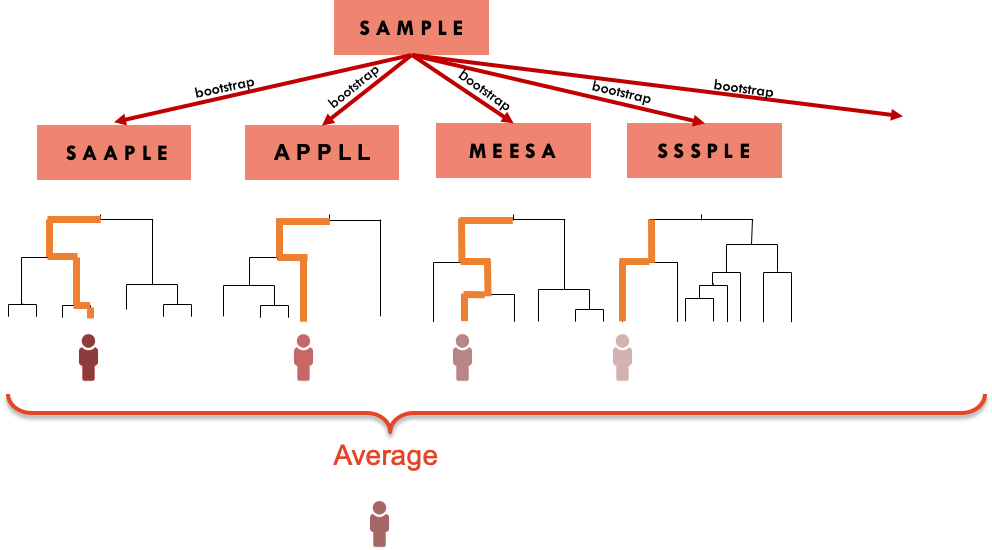

Breiman, L. Bagging Predictors. Machine Learning 24. This research aims to assess and compare the performance of single and ensemble classifiers of Support Vector Machine (SVM) and Classification Tree (CT) using simulation data. Visual showing how training instances are sampled for a predictor in bagging ensemble learning.

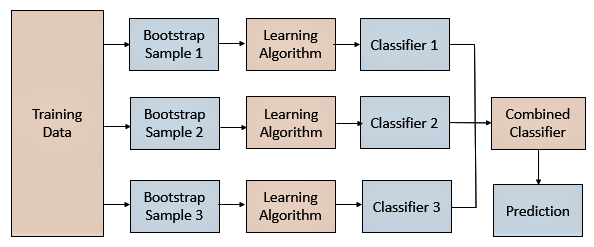

Customer churn prediction was carried out using AdaBoost classification and BP neural network techniques. Given a new dataset, calculate the average of the predictions from each model. Bagging aims to improve the accuracy and performance of machine learning algorithms.

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. For the predictions blue, blue, red, blue and red, we would take the most frequent class and predict blue.
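The two aggregation rules can be written down directly. The sketch below is only an illustration: the helper names are hypothetical, and the numeric predictions are made-up values. The class votes are the five from the example above.

```python
from collections import Counter
from statistics import mean

def aggregate_classes(predictions):
    """Plurality vote: return the most frequent predicted class."""
    return Counter(predictions).most_common(1)[0][0]

def aggregate_numeric(predictions):
    """Average the individual predictions for a numerical outcome."""
    return mean(predictions)

votes = ["blue", "blue", "red", "blue", "red"]
print(aggregate_classes(votes))             # blue (3 votes against 2)
print(aggregate_numeric([2.0, 3.0, 4.0]))   # 3.0
```

If two classes tie, `Counter.most_common` returns the one that was counted first, so a real implementation may want an explicit tie-breaking rule.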

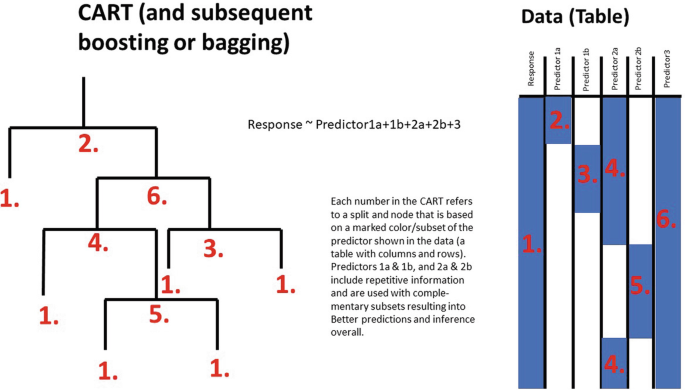

Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. In machine learning, we have a set of input variables (x) that are used to determine an output variable (y). Bagging, also known as bootstrap aggregation, is an ensemble learning method that is commonly used to reduce variance within a noisy dataset.
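Why averaging reduces variance can be made concrete with a standard identity. The symbols here are assumptions introduced for illustration: $B$ predictors $\hat f_1, \dots, \hat f_B$ that are identically distributed at a point $x$, each with variance $\sigma^2$ and pairwise correlation $\rho$.

```latex
\operatorname{Var}\!\left(\frac{1}{B}\sum_{b=1}^{B}\hat f_b(x)\right)
  = \frac{1}{B^{2}}\Bigl(B\,\sigma^{2} + B(B-1)\,\rho\,\sigma^{2}\Bigr)
  = \rho\,\sigma^{2} + \frac{1-\rho}{B}\,\sigma^{2}
```

The second term shrinks as $B$ grows, while the first term stays. Averaging therefore helps most when the individual predictors are only weakly correlated.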

Problems require them to perform aspects of problem solving that are not currently addressed by. Machine Learning 24, 123–140 (1996). © 1996 Kluwer Academic Publishers, Boston.

Almost all statistical prediction and learning problems encounter a bias-variance tradeoff. Statistics Department, University of California, Berkeley, CA 94720. Editor. The simulation data is based on three.

Bootstrap aggregation (bagging) is an ensembling method that attempts to resolve overfitting in classification or regression problems. Applications users are finding that real world. We present a methodology for constructing a short-term event risk score in heart failure patients from an ensemble predictor using bootstrap samples, two different classification rules (logistic regression and linear discriminant analysis) for mixed data (continuous or categorical), and random selection of explanatory variables to.

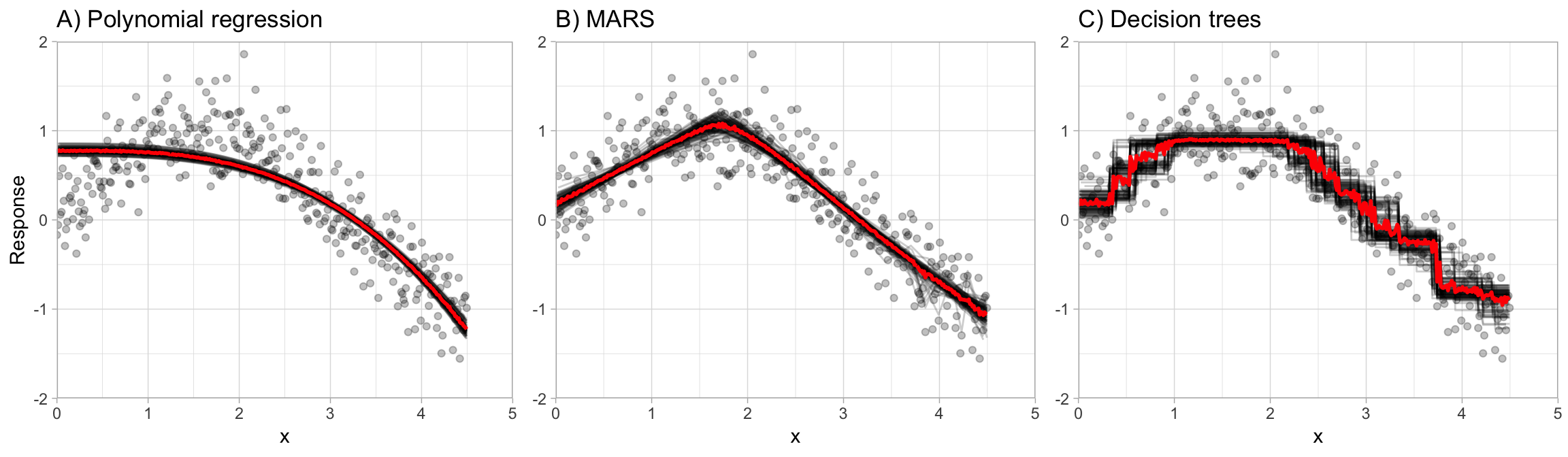

A relationship exists between the input variables and the output variable. When the relationship between a set of predictor variables and a response variable is linear, we can use methods like multiple linear regression to model the relationship between the variables. The size of the data set for each predictor is 4.

Machine Learning 24(2):123–140, 1996, by L. Breiman. Bagging can be used with any machine learning algorithm, but it's particularly useful for decision trees because they inherently have high variance. Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression. It also reduces variance and helps to avoid overfitting. Although it is usually applied to decision tree methods, it can be used with any type of method.
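To show how the pieces fit together with a tree-like base learner, here is a small end-to-end sketch. It makes several assumptions that are not from the quoted sources: the base learner is a one-split "decision stump" on a single feature (a stand-in for a full decision tree), the toy data and the ensemble size of 25 are made up, and all function names are hypothetical.

```python
import random
from collections import Counter

def majority(labels, default):
    """Most frequent label, or `default` when the list is empty."""
    return Counter(labels).most_common(1)[0][0] if labels else default

def fit_stump(points):
    """Fit a one-split stump on (x, label) pairs by minimising training errors."""
    overall = majority([y for _, y in points], None)
    best = None
    for t in sorted({x for x, _ in points}):      # candidate thresholds
        left = majority([y for x, y in points if x <= t], overall)
        right = majority([y for x, y in points if x > t], overall)
        errors = sum((left if x <= t else right) != y for x, y in points)
        if best is None or errors < best[0]:
            best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def fit_bagged(points, n_models, rng):
    """Fit one stump per bootstrap sample (drawn with replacement)."""
    return [fit_stump(rng.choices(points, k=len(points)))
            for _ in range(n_models)]

def predict_bagged(models, x):
    """Plurality vote over the individual stump predictions."""
    return majority([m(x) for m in models], None)

rng = random.Random(42)   # fixed seed, used only for repeatability
train = [(0.5, "red"), (1.0, "red"), (1.5, "red"), (2.0, "red"),
         (3.0, "blue"), (3.5, "blue"), (4.0, "blue"), (4.5, "blue")]

ensemble = fit_bagged(train, n_models=25, rng=rng)
print(predict_bagged(ensemble, 0.2), predict_bagged(ensemble, 5.0))
```

Each stump places its threshold slightly differently depending on which points landed in its bootstrap sample, and the vote smooths out those differences.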

Important customer groups can also be determined based on customer behavior and temporal data. In this post you discovered the Bagging ensemble machine learning. As machine learning has graduated from toy problems to real world.

For example, if we had 5 bagged decision trees that made the following class predictions for an input sample. In bagging, a random sample of data in a training set is selected with replacement, meaning that the individual data points can be chosen more than once. The results show that the research method of clustering before prediction can improve prediction accuracy.
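Sampling with replacement is easy to inspect on a small scale. The sketch below assumes a training set of 7 samples and a subset of size 4 per predictor, the figures quoted elsewhere on this page. The sample names, the number of predictors, and the helper name are hypothetical.

```python
import random
from collections import Counter

def draw_subsets(samples, subset_size, n_predictors, rng):
    """Draw one subset per predictor, each sampled WITH replacement."""
    return [rng.choices(samples, k=subset_size) for _ in range(n_predictors)]

rng = random.Random(7)    # fixed seed, used only for repeatability
training_set = ["s1", "s2", "s3", "s4", "s5", "s6", "s7"]

subsets = draw_subsets(training_set, subset_size=4, n_predictors=3, rng=rng)
for i, subset in enumerate(subsets, start=1):
    counts = Counter(subset)
    repeated = sorted(s for s, c in counts.items() if c > 1)   # drawn twice or more
    unused = sorted(set(training_set) - set(subset))           # never drawn
    print(f"predictor {i}: {subset} repeated={repeated} unused={unused}")
```

Any point listed under `repeated` was chosen more than once for that predictor, and the points under `unused` were not seen by it at all.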

Bagging (Breiman, 1996), a name derived from bootstrap aggregation, was the first effective method of ensemble learning and is one of the simplest methods of arching [1].

The goal of. Methods such as Decision Trees can be prone to overfitting on the training set, which can lead to wrong predictions on new data. Manufactured in The Netherlands.

The meta-algorithm, which is a special case of model averaging, was originally designed for classification and is usually applied to decision tree models, but it can be used with any type of. The results of repeated tenfold cross-validation experiments for predicting the QLS and GAF functional outcome of schizophrenia with clinical symptom scales using machine learning predictors such as the bagging ensemble model with feature selection, the bagging ensemble model, MFNNs, SVM, linear regression, and random forests. After several data samples are generated these.

Top 6 Machine Learning Algorithms For Classification By Destin Gong Towards Data Science

Bagging Machine Learning Through Visuals 1 What Is Bagging Ensemble Learning By Amey Naik Machine Learning Through Visuals Medium

An Introduction To Bagging In Machine Learning Statology

2 Bagging Machine Learning For Biostatistics

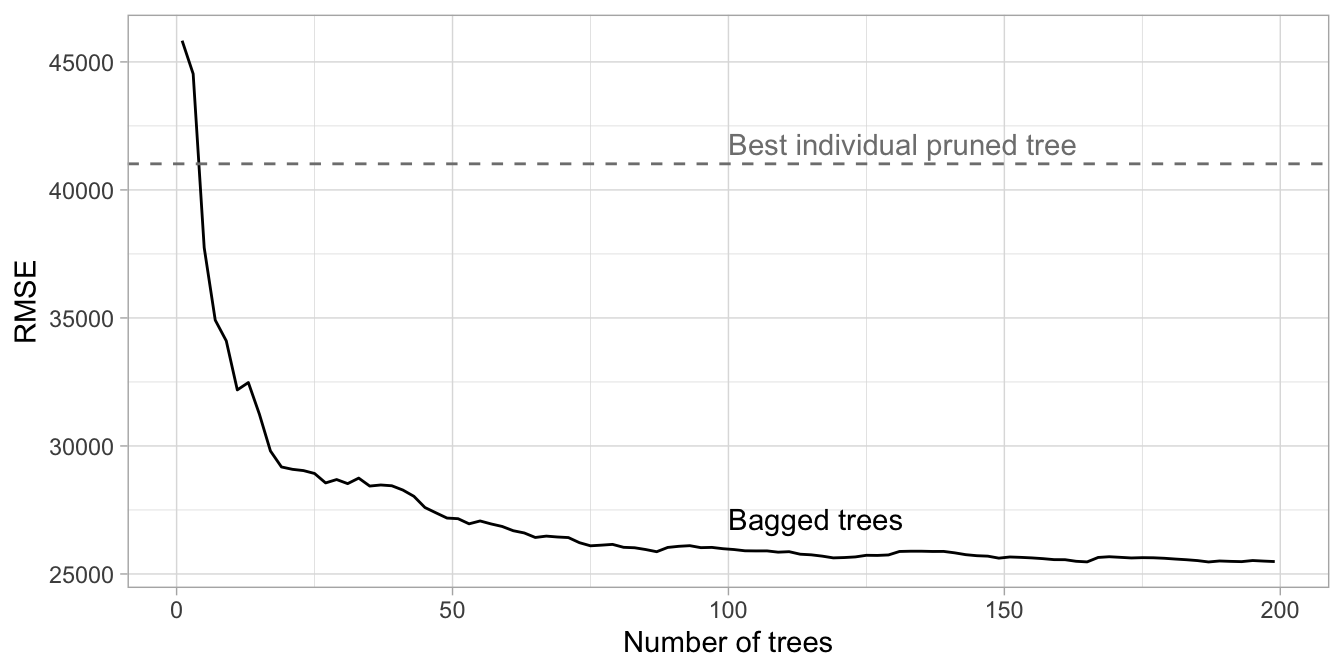

Chapter 10 Bagging Hands On Machine Learning With R

Bootstrap Aggregation Bagging In Regression Tree Ensembles Download Scientific Diagram

Machine Learning What Is The Difference Between Bagging And Random Forest If Only One Explanatory Variable Is Used Cross Validated

Ensemble Models Bagging And Boosting Dataversity

Guide To Ensemble Methods Bagging Vs Boosting

Ensemble Methods In Machine Learning Bagging Subagging

Bagging And Pasting In Machine Learning Data Science Python

Boosting Bagging And Ensembles In The Real World An Overview Some Explanations And A Practical Synthesis For Holistic Global Wildlife Conservation Applications Based On Machine Learning With Decision Trees Springerlink

Bagging Bootstrap Aggregation Overview How It Works Advantages

An Example Of Bagging Ensemble 20 Download Scientific Diagram